Those who cannot remember the past are condemned to repeat it.

George Santayana

History isn’t always clear-cut. It’s written by anyone with the will to write it down and the forum to distribute it. It’s valuable to understand different perspectives and the contexts that created them. The evolution of the term Data Science is a good example.

I learned statistics in the 1970s in a department of behavioral scientists and educators rather than a department of mathematics. At that time, the image of statistics was framed by academic mathematical-statisticians. They wrote the textbooks and controlled the jargon. Applied statisticians were the silent majority, a sizable group overshadowed by the academic celebrities. For me, reading Tukey’s 1977 book Exploratory Data Analysis was a revelation. He came from a background of mathematical statistics yet wrote about applied statistics, a very different animal.

My applied-statistics cohorts and I were a diverse group: educational statisticians, biostatisticians, geostatisticians, psychometricians, social statisticians, and econometricians, with nary a mathematician in the group. We referred to ourselves collectively as data-scientists, a term we heard from our professor. We were all data scientists, despite our different educational backgrounds, because we all worked with data. But the term never stuck and faded away over the years.

Age of Number-Crunching: 1960s-1970s

Applied statistics had been very important during World War II, most notably in code breaking but also in military applications and more mundane logistics and demographic analyses. After the war, the dominance of deterministic engineering analysis grew and drew most of the public’s attention. There were many new technologies in consumer goods and transportation, especially aviation and the space race, so statistics wasn’t on most people’s radar. Statistics was considered to be a field of mathematics. The public’s perception of a statistician was a mathematician, wearing a white lab coat, employed in a university mathematics department, who was working on who-knows-what.

One of the technologies that came out of WWII was ENIAC, which led to the IBM/360 mainframes of the mid-1960s. These computers were still huge and complex, but compared to ENIAC, quite manageable. They were a technological leap forward and inexpensive enough to become part of most university campuses. Mainframes became the mainstays of education. Applied statisticians and programmers led the way; computer rooms across the country were packed with them.

In 1962, John Tukey wrote in “The Future of Data Analysis”:

“For a long time, I have thought I was a statistician, interested in inferences from the particular to the general. But as I have watched mathematical statistics evolve, I have had cause to wonder and to doubt…I have come to feel that my central interest is in data analysis, which I take to include, among other things: procedures for analyzing data, techniques for interpreting the results of such procedures, ways of planning the gathering of data to make its analysis easier, more precise or more accurate, and all the machinery and results of (mathematical) statistics which apply to analyzing data.”

I read that paper as part of my graduate studies. Perhaps applied statisticians saw this paper as an opportunity to develop their own identity, apart from determinism and mathematics, and even mathematical statistics. But it really wasn’t an organized movement; it just evolved.

So as my cohorts and I understood it, the term data-sciences was really just an attempt to coin a collective noun for all the number-crunching, just as social-sciences was a collective noun for sociology, anthropology, and related fields. The data sciences included any field that analyzed data, regardless of the domain specialization, as opposed to pure mathematical manipulations. Mathematical statistics was NOT a data science because it didn’t involve data. Biostatistics, chemometrics, psychometrics, social and educational statistics, epidemiology, agricultural statistics, econometrics, and other applications were part of data science. Business statistics, outside of actuarial science, was virtually nonexistent. There were surveys but business leaders preferred to call their own shots. Data-driven business didn’t become popular until the 21st century. But if it had been a substantial field, it would have been a data science.

Computer programming might have involved managing data but to statisticians it was not a data science because it didn’t involve any analysis of data. There was no science involved. At the time, it was called data processing. It involved getting data into a database and reporting them, but not analyzing them further. Naur (1974) had a different perspective. Naur was a computer scientist who considered data science to encompass dealing with existing data, and not how the data were generated or were to be analyzed. This was just the opposite of the view of applied statisticians. Different perspectives.

Programming in the 1950s and 1960s was evolving from the days of flipping switches on a mainframe behemoth, but was still pretty much limited to Fortran, COBOL, and a bit of Algol. There were issues with applied statisticians doing all their own programming. They tended to be less efficient than programmers and were sometimes unreliable. To paraphrase Dr. McCoy, “I’m an applied statistician, not a computer programmer.” This philosophy was reinforced by British statistician Michael Healy when he said:

No single statistician can be expected to have a detailed knowledge of all aspects of statistics and this has consequences for employers. Statisticians flourish best in teams—a lone applied statistician is likely to find himself continually forced against the edges of his competence.

M.J.R. Healy. 1973. The Varieties of Statistician. J. Royal Statistical Society. 136(1), p. 71-74.

So when the late 1960s brought statistical-software-packages, most notably BMDP and later SPSS and SAS, applied statisticians were in Heaven. Still, the statistical packages were expensive programs that could only run on mainframes, so only the government, universities, and major corporations could afford their annual licenses, the mainframes to run them, and the operators to care for the mainframes. I was fortunate. My university had all the major statistical packages that were available at the time, some of which no longer exist. We learned them all, and not just the coding. It was a real education to see how the same statistical procedures were implemented in the different packages.

Throughout the 1970s, statistical analyses were done on those big-as-dinosaurs, IBM/360 mainframe computers. They had to be sequestered in their own climate-controlled quarters, waited on command and reboot by a priesthood of system operators. No food and no smoking allowed! Users never got to see the mainframes except, maybe, through a small window in the locked door. They used magnetic tapes. I saw ’em.

Conducting a statistical analysis was an involved process. To analyze a data set, you first had to write your own programs. Some people used standalone programming languages, usually Fortran. Others used the languages of SAS or SPSS. There were no GUIs (Graphical User Interfaces) or code writing applications. The statistical packages were easier to use than the programming languages but they were still complicated.

Once you had handwritten the data-analysis program, you had to wait in line for an available keypunch machine so you could transfer your program code and all your data onto 3¼-by-7⅜-inch computer punch-cards. After that, you waited so you could feed the cards through the mechanical card-reader. On a good day, it didn’t jam … much. Finally, you waited for the mainframe to run your program and the printer to output your results. Then the priesthood would transfer the printouts to bins for pickup. When you picked up your output, sometimes all you got was a page of error codes. You had to decipher the codes, decide what to do next, and start the process all over again. Life wasn’t slower back then; it just required more waiting.

In the 1970s, personal computers, or what would eventually evolve into what we now know as PCs, were like mammals during the Jurassic period, hiding in protected niches while the mainframe dinosaurs ruled. Before 1974, most PCs were built by hobbyists from kits. The MITS Altair is generally acknowledged as the first personal computer, although there are more than a few other claimants. Consumer-friendly PCs were a decade away. (My first PC was a Radio Shack TRS-80, AKA Trash 80, that I got in 1980; it didn’t do any statistics but I did learn BASIC and word processing.) Big businesses had their mainframes but smaller businesses didn’t have any appreciable computing power until the mid-1980s. By that time, statistical software for PCs began to spring out of academia. There was a ready market of applied statisticians who learned on a mainframe using SAS and SPSS but didn’t have them in their workplaces.

Age of Data-Wrangling: 1980s-2000s

Statistical analysis changed a lot after the 1970s. Punch cards and their supporting machinery became extinct. Mainframes were becoming an endangered species, having been exiled to specialty niches by PCs that could sit on a desk. Secure, climate-controlled rooms weren’t needed, nor were the operators. Now companies had IT Departments. The technicians sat in their own areas, where they could eat and smoke, and went out to the users who had a computer problem. It was as if all the doctors left their hospital practices to make house calls.

Inexpensive statistical packages that ran on PCs multiplied like rabbits. All of these packages had GUIs; all were kludgy and even unusable by today’s standards. Even the venerable ancients, SAS and SPSS, evolved point-and-click faces (although you could still write code if you wanted). By the mid-1980s, you could run even the most complex statistical analysis in less time than it takes to drink a cup of coffee … so long as your computer didn’t crash.

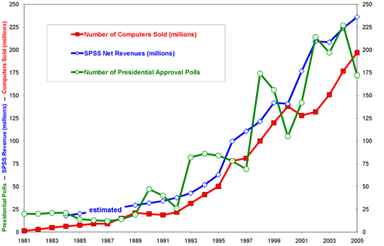

PC sales had reached almost a million per year by 1980. But then in 1981, IBM introduced their 8088 PC. Over the next two decades, the number of IBM-compatible PCs that were sold increased annually to almost 200 million. From the early 1990s, sales of PCs had been fueled by Pentium-speed, GUIs, the Internet, and affordable, user-friendly software, including spreadsheets with statistical functions. MITS and the Altair were long gone, now seen only in museums, but Microsoft survived, evolved, and became the apex predator.

The maturation of the Internet also created many new opportunities. You no longer had to have access to a huge library of books to do a statistical analysis. There were dozens of websites with reference materials for statistics. Instead of purchasing one expensive book, you could consult a dozen different discussions on the same topic, free. No dead trees need clutter your office. If you couldn’t find a website with what you wanted, there were discussion groups where you could post your questions. Perhaps most importantly, though, data that would have been difficult or impossible to obtain in the 1970s were now just a few mouse-clicks away, usually from the federal government.

So, with computer sales skyrocketing and the Internet becoming as addictive as crack, it’s not surprising that the use of statistics might also be on the rise. Consider the trends shown in this figure. The red squares represent the number of computers sold from 1981 to 2005. The blue diamonds, which follow a trend similar to computer sales, represent revenues for SPSS, Inc. So at least some of those computers were being used for statistical analyses.

Another major event in the 1980s was the introduction of Lotus 1-2-3. The spreadsheet software provided users with the ability to manage their data, perform calculations, and create charts. It was HUGE. Everybody who analyzed data used it, if for nothing else, to scrub their data and arrange them in a matrix. Like a firecracker, the life of Lotus 1-2-3 was explosive but brief. A decade after its introduction, it lost its prominence to Microsoft Excel, and by the time data science got sexy in the 2010s, it was gone.

With the availability of more computers and more statistical software, you might expect more statistical analyses to be done. That’s a tough trend to quantify, but consider the increases in the numbers of political polls and pollsters. Before 1988, there were on average only one or two presidential approval polls conducted each month. Within a decade, that number had increased to more than a dozen. In the figure, the green circles represent the number of polls conducted on presidential approval. This trend is quite similar to the trends for computer sales and SPSS revenues. Correlation doesn’t imply causation but sometimes it sure makes a lot of sense.

Perhaps even more revealing is the increase in the number of pollsters. Before 1990, the Gallup Organization was pretty much the only organization conducting presidential approval polls. Now, there are several dozen. These pollsters don’t just ask about Presidential approval, either. There are a plethora of polls for every issue of real importance and most of the issues of contrived importance. Many of these polls are repeated to look for changes in opinions over time, between locations, and for different demographics. And that’s just political polls. There has been an even faster increase in polling for marketing, product development, and other business applications. Even without including non-professional polls conducted on the Internet, the growth of polling has been exponential.

Statistics was going through a phase of explosive evolution. By the mid-1980s, statistical analysis was no longer considered the exclusive domain of professionals. With PCs and statistical software proliferating and universities requiring a statistics course for a wide variety of degrees, it became common for non-professionals to conduct their own analyses. Sabermetrics, for example, was popularized by baseball professionals who were not statisticians. Bosses who couldn’t program the clock on a microwave thought nothing of expecting their subordinates to do all kinds of data analysis. And they did. It’s no wonder that statistical analyses were becoming commonplace wherever there were numbers to crunch.

Against that backdrop of applied statistics came the explosion of data wrangling capabilities. Relational databases and data retrieval with SQL (Structured Query Language, often pronounced “sequel”) became the vogue. Technology also exerted its influence. Not only were PCs becoming faster but, perhaps more importantly, hard disk drives were getting bigger and less expensive. This led to data warehousing, and eventually, the emergence of Big Data. Big Data brought Data Mining and black-box modeling. BI (Business Intelligence) emerged in 1989, mainly in major corporations.

Then came the 1990s. Technology went into overdrive. Bulletin Board Systems (BBSs) and Internet Relay Chat (IRC) evolved into instant messaging, social media, and blogging. The amount of data generated by and available from the Internet skyrocketed. Google and other search engines proliferated. Data sets were now not just big, they were BIG. Big Data required special software, like Hadoop, not just because of its volume but also because much of it was unstructured.

At this point, applied statisticians and programmers had symbiotic, though sometimes contentious, relationships. For example, data wranglers always put data into relational databases that statisticians had to reformat into matrices before they could be analyzed. Then, 1995-2000 brought the R programming language. This was notable for several reasons. Colleges that couldn’t afford the licensing and operational costs of SAS and SPSS began teaching R, which was free. This had the consequence of bringing programming back to the applied-statistics curriculum. It also freed graduates from worrying about having a way to do their statistical modeling at their new jobs wherever they might be.
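The reformatting chore mentioned above, turning relational records into a matrix, can be sketched in a few lines of Python. This is only an illustration under stated assumptions: the record layout (one subject, variable, value triple per row), the sample values, and the helper name `to_matrix` are all hypothetical, not anything a particular database or statistical package of the era produced.

```python
# Hypothetical long-format rows, as a relational query might return them:
# one (subject, variable, value) record per measurement.
rows = [
    ("s1", "age", 34.0),
    ("s1", "score", 88.0),
    ("s2", "age", 29.0),
    ("s2", "score", 92.0),
    ("s3", "age", 41.0),  # s3 has no score recorded
]


def to_matrix(records):
    """Pivot (subject, variable, value) records into a subjects-by-variables matrix."""
    subjects = sorted({subject for subject, _, _ in records})
    variables = sorted({variable for _, variable, _ in records})
    row_index = {subject: i for i, subject in enumerate(subjects)}
    col_index = {variable: j for j, variable in enumerate(variables)}
    # Start with None everywhere so missing measurements stay visibly missing.
    matrix = [[None] * len(variables) for _ in subjects]
    for subject, variable, value in records:
        matrix[row_index[subject]][col_index[variable]] = value
    return subjects, variables, matrix


subjects, variables, matrix = to_matrix(rows)
for subject, values in zip(subjects, matrix):
    print(subject, values)
```

Each row of the resulting matrix is one subject and each column is one variable, which is the layout most statistical procedures expect. Missing measurements are left as `None` rather than filled in, since how to handle them is an analysis decision.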

Conducting a data analysis in the 1990s was nowhere near as onerous as it was twenty years before. You could work at your desk on your PC instead of camping out in the computer room. Many companies had their own data wranglers who built centralized data repositories for everyone to use. You didn’t have to enter your data manually very often, and if you did, it was by keyboarding rather than keypunching. Big companies had their big data but most data sets were small enough to handle in Access if not Excel. Cheap, GUI-equipped statistical software was readily available for any analysis Excel couldn’t handle. Analyses took minutes rather than hours. It took longer to plan an analysis than it did to conduct it. Anyone who took a statistics class in college began analyzing their own data. The 1990s produced a lot of cringeworthy statistical analyses and misleading charts and graphs. Oh, those were the days.

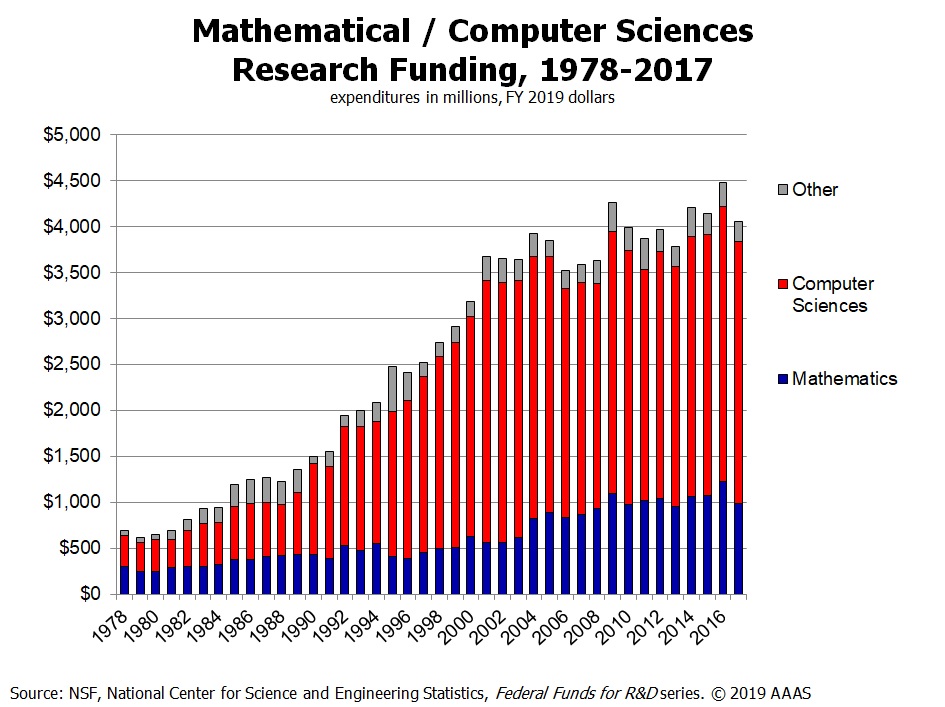

The 2000s brought more technology. Most people had an email account. You could bring a library of ebooks anywhere. Cell phones evolved into smartphones. Flash drives made datasets portable. Tablets augmented PCs and smartphones. Bluetooth facilitated data transfer. Then something else important happened—funding.

Funding for activities related to data science and big data became available from a variety of sources, especially government agencies like the National Science Foundation, the National Institutes of Health, and the National Cancer Institute. (The NIH released its first strategic plan for data science in 2018.) The UK government also funds training in artificial intelligence and data science. So too do businesses and non-profit organizations. Major universities responded by expanding their programs to accommodate the criteria that would bring them the additional funding. What had been called applied statistics and programming were rebranded as data science and big data.

Donoho captured the sentiment of statisticians in his address at the 2015 Tukey Centennial workshop:

“Data Scientist means a professional who uses scientific methods to liberate and create meaning from raw data. … Statistics means the practice or science of collecting and analyzing numerical data in large quantities.

To a statistician, [the definition of data scientist] sounds an awful lot like what applied statisticians do: use methodology to make inferences from data. … [the] definition of statistics seems already to encompass anything that the definition of Data Scientist might encompass …

The statistics profession is caught at a confusing moment: the activities which preoccupied it over centuries are now in the limelight, but those activities are claimed to be bright shiny new, and carried out by (although not actually invented by) upstarts and strangers.”

Age of Data Science: 2010s-Present

The rest of the story of Data Science is more clearly remembered because it is recent. Most of today’s data scientists hadn’t even graduated from college by the 2010s. They might remember, though, the technological advances, the surge in social connectedness, and the money pouring into data science programs in anticipation of the money that would be generated from them. Those factors led to a revolution.

The average age of data scientists in 2018 was 30.5; the median was lower. The younger half of data scientists were just entering college in the 2000s, just when all that funding was hitting academia. (FWIW, I’m in the imperceptibly tiny bar on the upper left of the chart along with 193 others.) But KDnuggets concluded that:

“… rather than attracting individuals from new demographics to computing and technology, the growth of data science jobs has merely creating [sic] a new career path for those who were likely to become developers anyway.”

The event that propelled Data Science into the public’s consciousness, though, was undoubtedly the 2012 Harvard Business Review article that declared data scientist to be the sexiest job of the 21st century. The article by Davenport and Patil described a data scientist as “a high-ranking professional with the training and curiosity to make discoveries in the world of big data.” Ignoring the thirty-year history of the term, though not the concept, which was new, the article notes that there were already “thousands of data scientists … working at both start-ups and well-established companies” in just five years. I doubt they were all high-ranking.

Davenport and Patil attributed the emergence of data-scientist as a job title to the varieties and volumes of unstructured Big Data in business. But a consistent definition of data scientist proved to be elusive. Six years later in 2018, KDnuggets described Data Science as an interdisciplinary field at the intersection of Statistics, Computer Science, Machine Learning, and Business, quite a bit more specific than the HBR article. There were also quite a few other opinions about what data science actually was. Everybody wanted to be on the bandwagon that was sexy, prestigious, and lucrative.

…

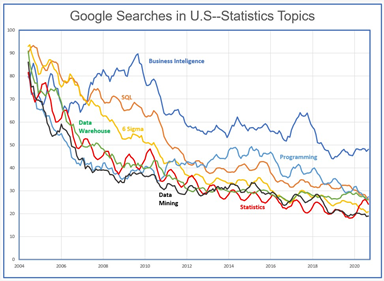

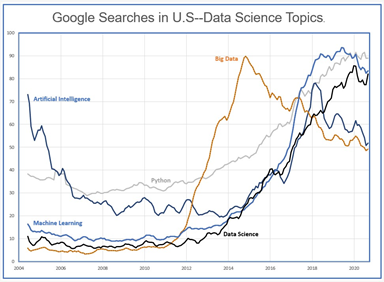

The numbers of Google searches related to topics concerning data reveal the popularity, or at least the curiosity, of the public. Topics related to the search term statistics (most notably statistics, data mining, and data warehouse) all decreased in popularity from about 80 searches per month in 2004 to 25 searches per month in 2020. Six Sigma and SQL were somewhat more popular than these topics between 2004 and 2011. Computer Programming rose in popularity slightly from 2014 to 2016. Business Intelligence followed a pattern similar to SQL but had 10 to 30 more searches per month.

Topics related to the search term data science—Data Science, Big Data, and Machine Learning—had fewer than 20 searches per month from 2004 until 2012 when they began increasing rapidly. Big Data peaked in 2014 then decreased steadily. Data Science and Machine Learning increased until about 2018 and then leveled off. The term Python has increased from about 35 searches per month in 2013 to 90 searches per month in 2020. The term Artificial Intelligence decreased from 70 searches per month in 2004 to a minimum of 30 searches per month from 2008 to 2014, then increased to 80 searches per month in 2019.

While many people believe Artificial Intelligence is a relatively recent field of study, mostly an idea of science fiction, it actually goes back to ancient history. Autopilots in airplanes and ships date back to the early 20th century; now we have driverless cars and trucks. Computers, perhaps the ultimate AI, were first developed in the 1940s. Voice recognition began in the 1950s; now we can talk to Siri and Cortana. Amazon and Netflix tell us what we want to do. But perhaps the single event that caught the public’s attention was in 1997 when Deep Blue became the first computer AI to beat a reigning world chess champion, Garry Kasparov. This led to AI being applied to other games, like Go and Jeopardy, which increased the public’s awareness of AI.

Aviation went from its first flight to landing on the moon in 65 years. Music went from vinyl to tape to disk to digital in 30 years. Data science overtook statistics in popularity in less than a decade.

It is interesting to compare the patterns of searches for the terms statistics, AI, big data, ML, and data science. Everybody knows what statistics is. They see statistics every day on the local weather reports. AI entered the public’s consciousness with the game demonstrations and movies, like Terminator and Star Wars. Big data isn’t all that mysterious, especially since the definition is rock solid even if new V-definitions appear occasionally. But ML and data science are more enigmatic. ML is conceptually difficult to understand because, unlike AI, it is far from what the public sees. The definition of data science, however, suffers from too much diversity of opinion. In the 1970s, Tukey and Naur had diametrically opposed definitions. Many others since then have added more obfuscation than clarity. Fayyad and Hamutcu conclude that “there is no commonly agreed on definition for data science,” and furthermore, “there is no consensus on what it is.”

So, universities train students to be data scientists, businesses hire graduates to work as data scientists, and people who call themselves data scientists write articles about what they do. But as professions, we can’t agree on what data science is. As Humpty Dumpty said:

“When I use a word,” Humpty Dumpty said, in rather a scornful tone, “it means just what I choose it to mean—neither more nor less.” “The question is,” said Alice, “whether you can make words mean so many different things.” “The question is,” said Humpty Dumpty, “which is to be master—that’s all.”

Lewis Carroll (Charles L. Dodgson), Through the Looking-Glass, chapter 6, p. 205 (1934). First published in 1872.

What Is A Data Scientist?

The term data scientist has never had a consistent meaning. Tukey’s followers thought it applied to all applied statisticians and data analysts. Naur’s followers thought it referred to all programmers and data wranglers. Those were both collective nouns, but they were exclusive. Tukey’s definition excluded data wranglers. Naur’s definition excluded data analysts. Almost forty years later, Davenport and Patil used the term for anyone with the skills to solve problems using Big Data from business. Some of today’s definitions specify that individual data scientists must be adept at wrangling, analysis, and modeling and must also have business expertise. Of course there are disagreements.

Skills—Some definitions redefine what the skills are. Statistics is the primary example. Some definitions limit statistics to hypothesis testing even though modeling and prediction have been part of the discipline for over a century. The implication is that anything that isn’t hypothesis testing isn’t statistics.

Data—Some definitions specify that data science uses Big Data related to business. The implication is that smaller data sets from non-business domains are not part of data science.

Novelty—Some definitions focus on new, especially state-of-the-art technologies and methods over traditional approaches. Data generation is the primary example. Modern technologies, like automatic web scraping with Python, are key methods of some definitions of data science. The implication is that traditional probabilistic sampling methods are not part of data science.

Specialization—Some definitions require data scientists to be multifaceted, generalist, jacks-of-all-trades. This strategy of skills has been abandoned by virtually all scientific professions. As Healy suggested, you can’t expect a computer programmer to be a statistician any more than you can expect a statistician to be a programmer. Yes, there still are generalists, nexialists, interdisciplinarians; they make good project managers and maybe even politicians. But, would you go to a GP (general practitioner) for cancer treatments?

These disagreements have led to some disrespectful opinions: you’re not a real data scientist, you’re a programmer, statistician, data analyst, or some other appellation. So, the fundamental question is whether the term data science refers to a big tent that holds all the skills, methods, and types of data that might solve a problem or to a small tent that can only hold the specific skills useful for Big Data from business.

What’s in a name? That which we call a rose by any other name would smell as sweet.

William Shakespeare, Romeo and Juliet, Act II, Scene II

The definition of data science is a modern retelling of the parable of the blind men and an elephant:

A group of blind men heard that a strange animal, called an elephant, had been brought to the town, but none of them were aware of its shape and form. Out of curiosity, they said: “We must inspect and know it by touch, of which we are capable”. So, they sought it out, and when they found it they groped about it. The first person, whose hand landed on the trunk, said, “This being is like a thick snake”. For another one whose hand reached its ear, it seemed like a kind of fan. As for another person, whose hand was upon its leg, said, the elephant is a pillar like a tree-trunk. The blind man who placed his hand upon its side said the elephant, “is a wall”. Another who felt its tail, described it as a rope. The last felt its tusk, stating the elephant is that which is hard, smooth and like a spear.

Data science is an elephant. The harder we try to define it the more unrecognizable it becomes. Is it a collective noun or an exclusionary filter? There is no consensus. But that’s the way the world works. Maybe in fifty years, colleges will have programs to train Wisdom Oracles to take the work of pedestrian data scientists and turn it into something really useful.

Anything that is in the world when you’re born is normal and ordinary and is just a natural part of the way the world works. Anything that’s invented between when you’re fifteen and thirty-five is new and exciting and revolutionary and you can probably get a career in it. Anything invented after you’re thirty-five is against the natural order of things.

Douglas Adams

All photos and graphs by author except as noted.

Very nicely done.

You and readers may be interested in the article and accompanying discussion:

David Donoho (2017) 50 Years of Data Science, Journal of Computational and Graphical Statistics, 26:4, 745-766, DOI: 10.1080/10618600.2017.1384734.

Discussants include Zheng, Bryan, Wickham, Peng, Holmes, Josse, Hofmann, VanderPlas, Hicks, Majumder, and Cheng.

Thank you. I updated the link.